I watched a video earlier this week from Google’s Gemma Developer Day 2024. It was short (about 12 minutes), the presenter used freely available tools (llama.cpp, VS Code, and a laptop), and I was able to follow along with my paltry software engineering knowledge.

This was my takeaway: instead of using pre-defined data assets in your app, you can prompt an LLM to create that asset.

The actual point of the video is to show that Gemma can run locally on your laptop, but that wasn’t what caught my attention. Until this video, I hadn’t seen any demos of LLMs dynamically creating app assets on demand. This was a “seeing is believing” experience for me.

Here are the key screenshots that made it real for me:

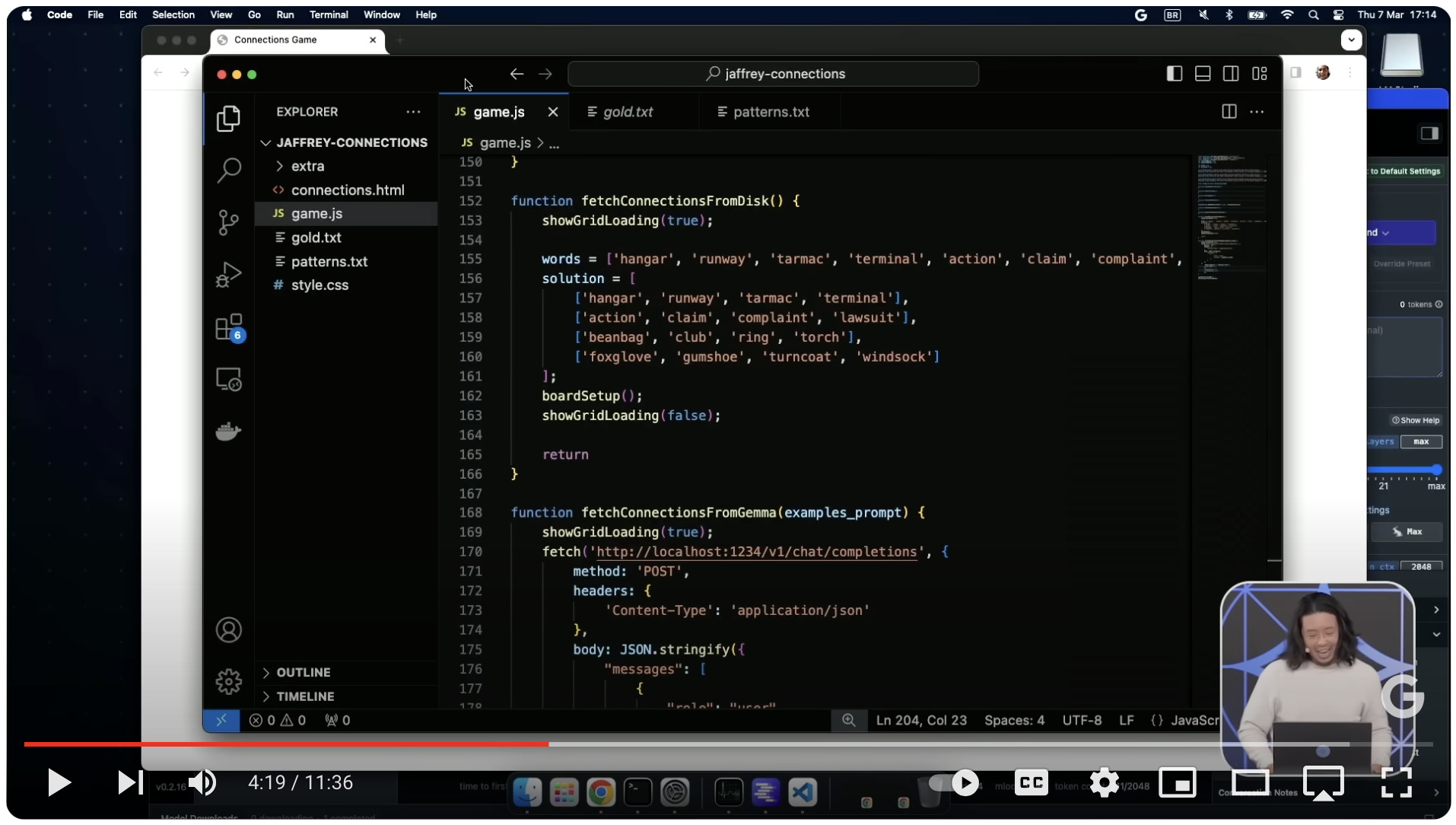

The demo in the video is based on a game called “Connections”. The game is created by the function fetchConnectionsFromDisk(). The data assets that the game uses are hardcoded into the variables words and solutions.

In the olden days, you would improve the replayability of a game like this by generating a ton of static assets ahead of time. Here is where the LLM comes in. Instead of loading the game from disk, fetchConnectionsFromGemma() generates the game assets.
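To make the swap concrete, here is a rough sketch of how I picture it, in TypeScript. This is not the code from the video: the `ConnectionsGame` shape, the sample words, the prompt text, and the `Generate` type are all my own assumptions, and a real model call would be asynchronous (I kept it synchronous to keep the sketch short). The only names taken from the video are the two function names and the `words` and `solutions` fields.

```typescript
// Hypothetical shape of one puzzle. The field names follow the post
// (`words`, `solutions`); the exact structure is an assumption.
interface Solution {
  category: string;
  words: string[];
}
interface ConnectionsGame {
  words: string[];
  solutions: Solution[];
}

// Static version: the puzzle is hardcoded, so every play is identical.
// (Two small groups here for brevity.)
function fetchConnectionsFromDisk(): ConnectionsGame {
  const words = ["apple", "Mars", "pear", "Venus", "plum", "Saturn", "fig", "Earth"];
  const solutions: Solution[] = [
    { category: "Fruits", words: ["apple", "pear", "plum", "fig"] },
    { category: "Planets", words: ["Mars", "Venus", "Saturn", "Earth"] },
  ];
  return { words, solutions };
}

// Stand-in for a call to a locally running model: prompt in, text out.
// Assumption: a real call would go through an async API instead.
type Generate = (prompt: string) => string;

// Dynamic version: same return type, but the content comes from the model.
function fetchConnectionsFromGemma(generate: Generate): ConnectionsGame {
  const reply = generate(
    "Create a Connections puzzle. Reply with JSON containing `words` and `solutions`."
  );
  // No validation here; this only illustrates the swap.
  return JSON.parse(reply) as ConnectionsGame;
}
```

Because both functions return the same shape, the rest of the game does not need to know whether a puzzle was hardcoded or generated.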

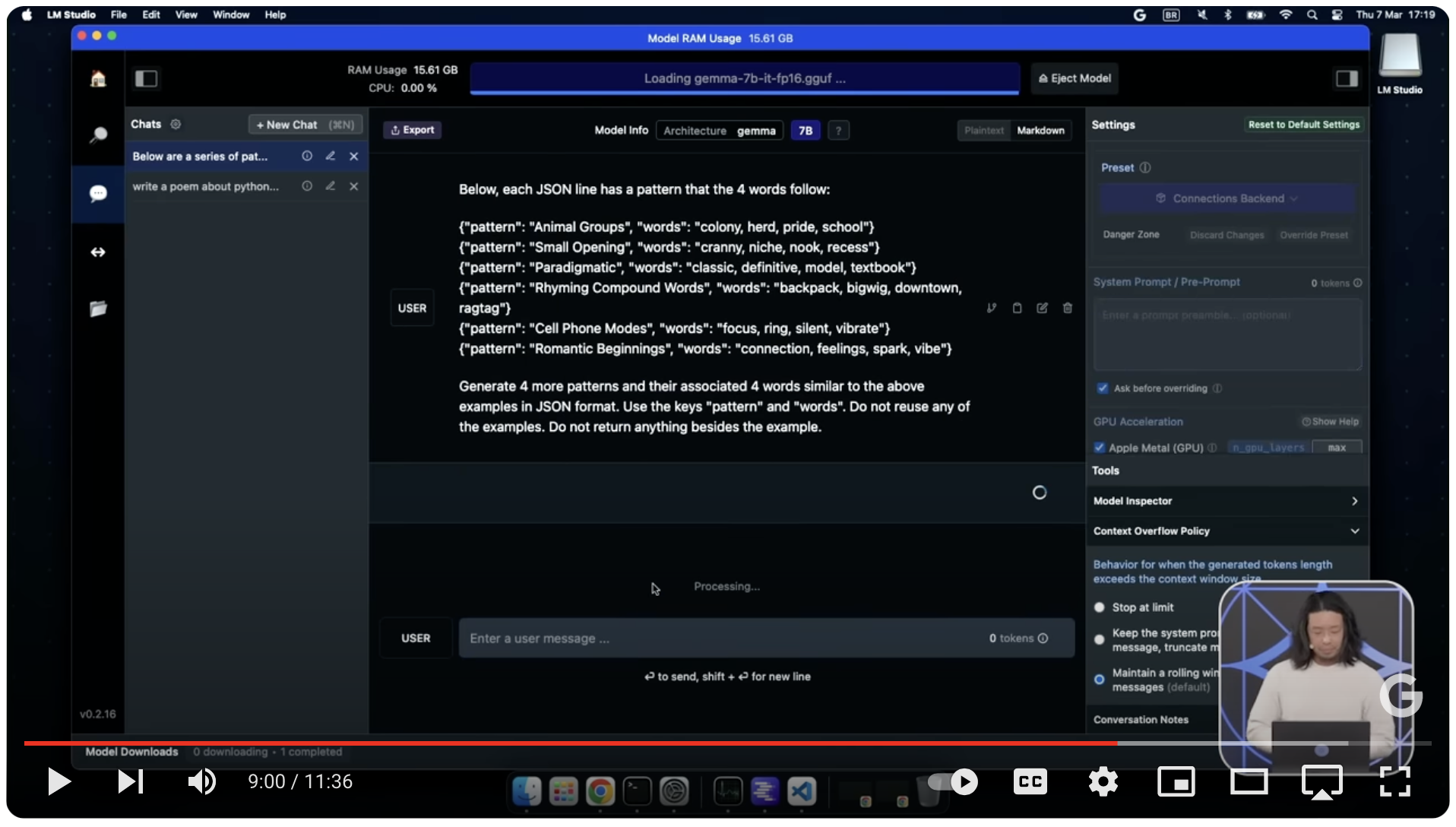

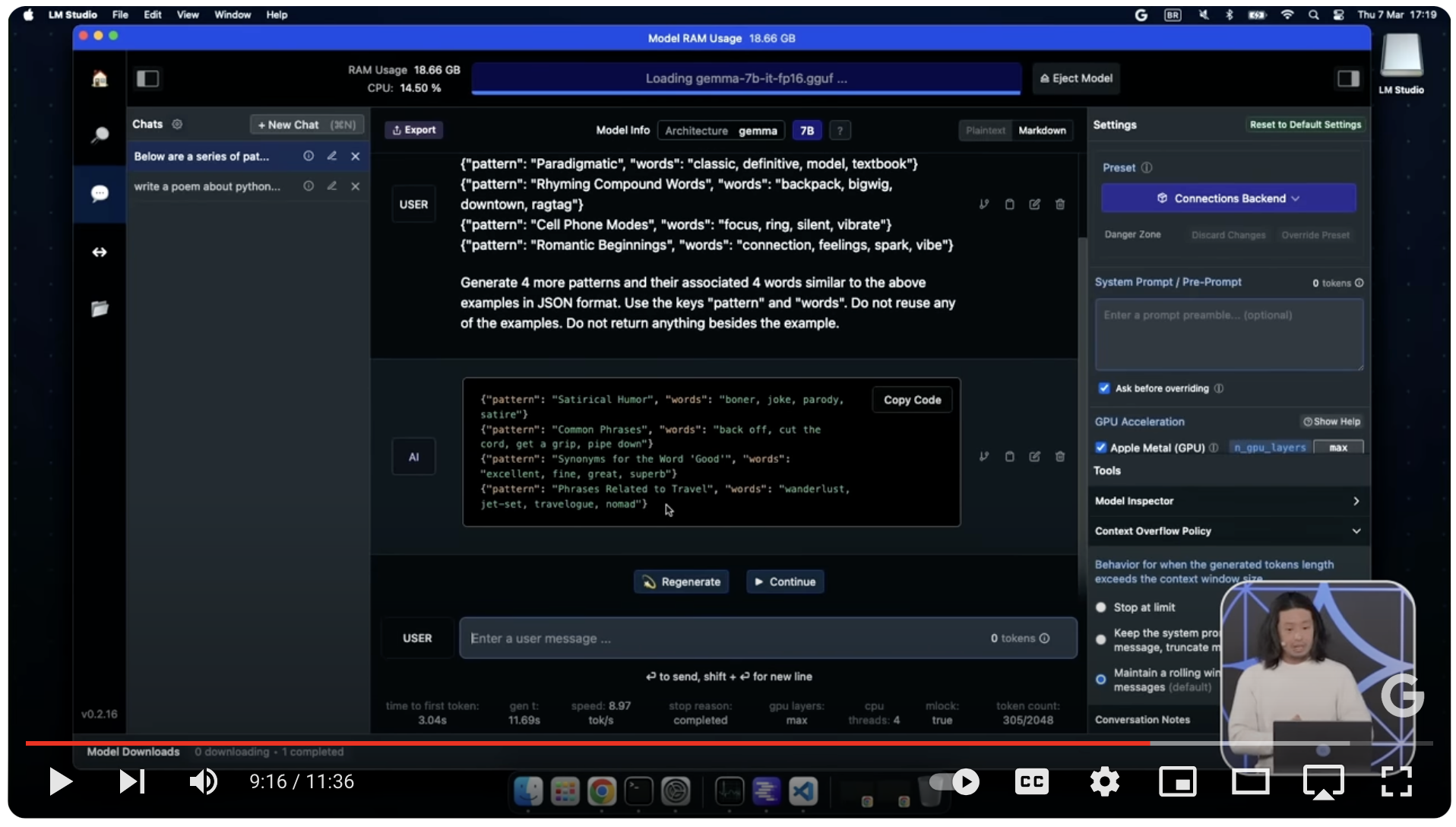

In those two screenshots, an LLM prompt is used to create the game assets as structured data in JSON. Some prompt engineering is required to ensure that the LLM knows enough about the data and that its output is properly structured.
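The two halves of that prompt engineering can be sketched roughly as follows: a prompt that spells out the JSON shape, and a parser that refuses anything that doesn't match it. Again, this is my own illustration, not the prompt or code from the video. The helper names `buildPrompt` and `parseGame`, the prompt wording, and the JSON layout are all assumptions.

```typescript
interface Solution {
  category: string;
  words: string[];
}
interface ConnectionsGame {
  words: string[];
  solutions: Solution[];
}

// Spell out the exact JSON shape so the reply can be parsed by code.
function buildPrompt(groups: number, groupSize: number): string {
  return [
    `Create a word puzzle with ${groups} categories of ${groupSize} words each.`,
    "Reply with JSON only, in this exact shape:",
    '{"solutions": [{"category": "string", "words": ["string"]}]}',
  ].join("\n");
}

// Parse the model's reply and reject anything that is not the expected shape.
function parseGame(reply: string, groups: number, groupSize: number): ConnectionsGame {
  // Models sometimes wrap JSON in extra prose; keep only the outermost object.
  const start = reply.indexOf("{");
  const end = reply.lastIndexOf("}");
  if (start === -1 || end <= start) throw new Error("no JSON object in reply");

  const data = JSON.parse(reply.slice(start, end + 1));
  const solutions = data.solutions;
  if (!Array.isArray(solutions) || solutions.length !== groups) {
    throw new Error("wrong number of categories");
  }

  const words: string[] = [];
  for (const s of solutions) {
    const ok =
      s &&
      typeof s.category === "string" &&
      Array.isArray(s.words) &&
      s.words.length === groupSize &&
      s.words.every((w: unknown) => typeof w === "string");
    if (!ok) throw new Error("malformed category");
    words.push(...s.words);
  }
  return { words, solutions };
}
```

If `parseGame` throws, the app can simply ask the model again, or fall back to a hardcoded puzzle.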

The key takeaways for me: [1] the LLM is dynamically generating new game content on demand and [2] this is an easy example for getting started with using an LLM in an app.